A study led by Jinhe Su of the School of Computer Engineering at Jimei University introduces a framework for semantic segmentation of urban scenes in remote sensing data. Published in *Scientific Reports*, the research uses large language models (LLMs) to construct a universal knowledge graph, with the aim of making semantic segmentation more adaptable and robust across datasets.

Urban scene segmentation is a critical component in 3D city modeling and various remote sensing applications, including urban planning and environmental monitoring. Traditional methods often rely on dataset-specific knowledge graphs, which limits their generalizability across diverse remote sensing data. Su’s research addresses this limitation by proposing a framework that employs LLMs to extract cross-domain semantic relationships and build a universal knowledge graph. This graph is then incorporated into remote sensing semantic segmentation tasks, thereby enhancing the semantic understanding of urban scenes.

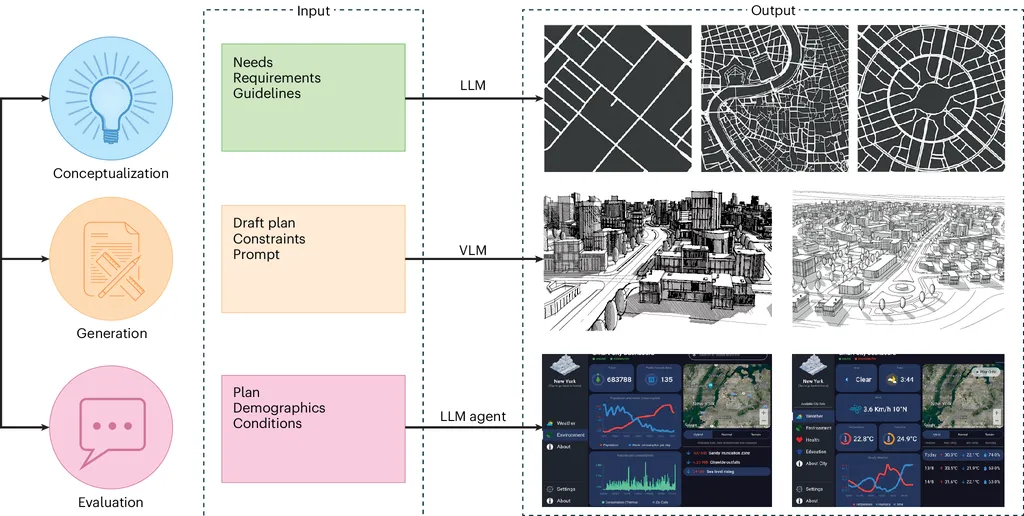

The framework consists of two key components: the Graph Construction module and the Knowledge Graph Fusion module (KGFusion). The Graph Construction module utilizes LLMs to extract semantic relationships from multi-source geospatial data, creating a universal knowledge graph. The KGFusion module then integrates this graph into a semantic segmentation network, improving the accuracy and adaptability of the segmentation process.
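To make the two-stage idea concrete, here is a minimal sketch, not the authors' implementation. It assumes the LLM output has already been parsed into weighted (head, relation, tail) triples, and it swaps in a simple graph-propagation step for KGFusion, whose internals the article does not describe. The class names, relations, weights, and the `alpha` parameter are all hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical triples of the kind an LLM might return when asked how
# scene classes relate. Classes, relations, and weights are invented here.
Triple = Tuple[str, str, str, float]
TRIPLES: List[Triple] = [
    ("car", "located_on", "road", 0.9),
    ("building", "adjacent_to", "road", 0.6),
    ("tree", "adjacent_to", "building", 0.4),
]


def build_adjacency(classes: Sequence[str], triples: Sequence[Triple]) -> List[List[float]]:
    """Turn triples into a row-normalised class-to-class adjacency matrix."""
    index = {name: i for i, name in enumerate(classes)}
    n = len(classes)
    adj = [[0.0] * n for _ in range(n)]
    for head, _relation, tail, weight in triples:
        i, j = index[head], index[tail]
        # Assumption: relations are treated as symmetric co-occurrence hints.
        adj[i][j] = max(adj[i][j], weight)
        adj[j][i] = max(adj[j][i], weight)
    for row in adj:
        total = sum(row)
        if total > 0:
            for j in range(n):
                row[j] /= total
    return adj


def fuse_scores(scores: Sequence[float], adj: Sequence[Sequence[float]], alpha: float = 0.5) -> List[float]:
    """Add graph-propagated evidence to one pixel's per-class scores.

    Each class receives alpha times the adjacency-weighted scores of its
    related classes. This is a stand-in for a learned fusion module.
    """
    n = len(scores)
    return [
        scores[i] + alpha * sum(adj[i][j] * scores[j] for j in range(n))
        for i in range(n)
    ]
```

In this sketch, a pixel that the network scores strongly as `road` raises the scores of `car` and `building`, the classes the graph links to roads. A real fusion module would more plausibly operate on feature maps and learn its weights during training.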

To evaluate the adaptability of their method across diverse domains, the researchers curated a mixed dataset encompassing urban, rural, and port scenes. The method achieved 70.94% mean Intersection over Union (mIoU) on the UAVid dataset and 63.23% on the Mixed dataset, exceeding the baseline methods by 0.43 and 1.04 percentage points, respectively.
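For readers unfamiliar with the metric, mIoU averages, over classes, the ratio of correctly labelled pixels to the union of predicted and ground-truth pixels for that class. The sketch below is a plain-Python illustration on flattened label lists. The function name and the choice to skip classes absent from both prediction and ground truth are assumptions, and benchmark toolkits may handle such edge cases differently.

```python
from typing import Sequence


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int) -> float:
    """Mean Intersection over Union for flattened integer label sequences."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")

    intersection = [0] * num_classes
    pred_count = [0] * num_classes
    target_count = [0] * num_classes
    for p, t in zip(pred, target):
        pred_count[p] += 1
        target_count[t] += 1
        if p == t:
            intersection[p] += 1

    ious = []
    for c in range(num_classes):
        union = pred_count[c] + target_count[c] - intersection[c]
        if union > 0:  # skip classes absent from both prediction and target
            ious.append(intersection[c] / union)
    return sum(ious) / len(ious) if ious else 0.0
```

For example, with predictions `[0, 0, 1, 1]` and ground truth `[0, 1, 1, 1]`, class 0 has an IoU of 1/2 and class 1 has an IoU of 2/3, so the mIoU is 7/12, or about 58.3%.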

“This research demonstrates the potential of LLMs in enhancing the semantic understanding of urban scenes,” said Jinhe Su. “By leveraging the power of LLMs, we can create a universal knowledge graph that adapts to diverse remote sensing data, making our method more robust and versatile.”

The research also has implications for the energy sector. Accurate urban scene segmentation supports the planning and monitoring of energy infrastructure such as solar farms, wind turbines, and smart grids. By improving the adaptability and robustness of semantic segmentation, this work could make energy planning and monitoring more efficient, contributing to a more sustainable and resilient energy infrastructure.

Moreover, the use of LLMs in constructing a universal knowledge graph opens up new possibilities for cross-domain applications. As Su explained, “Our method can be applied to various remote sensing tasks, including environmental monitoring, disaster management, and urban planning. The potential for broader applications in complex urban environments is immense.”

In conclusion, the research led by Jinhe Su advances semantic segmentation for remote sensing and urban planning. By using LLMs to build a universal knowledge graph, the study shows that segmentation can be made more adaptable and robust across datasets, which could support more efficient energy planning and monitoring. As the energy sector continues to evolve, insights from this research could help shape the future of urban environments and energy infrastructure.