In the high-stakes world of industrial safety, a groundbreaking advancement has emerged from the labs of China University of Mining and Technology-Beijing. Xin Li, a leading researcher in safety engineering, has introduced ODEM–YOLO, a cutting-edge model designed to revolutionize safety wear detection in cement aggregate production workshops. This innovation, detailed in a recent study published in the Journal of Engineering Science, addresses a critical gap in workplace safety, offering a robust solution to the persistent challenge of monitoring personal protective equipment (PPE) compliance in harsh industrial environments.

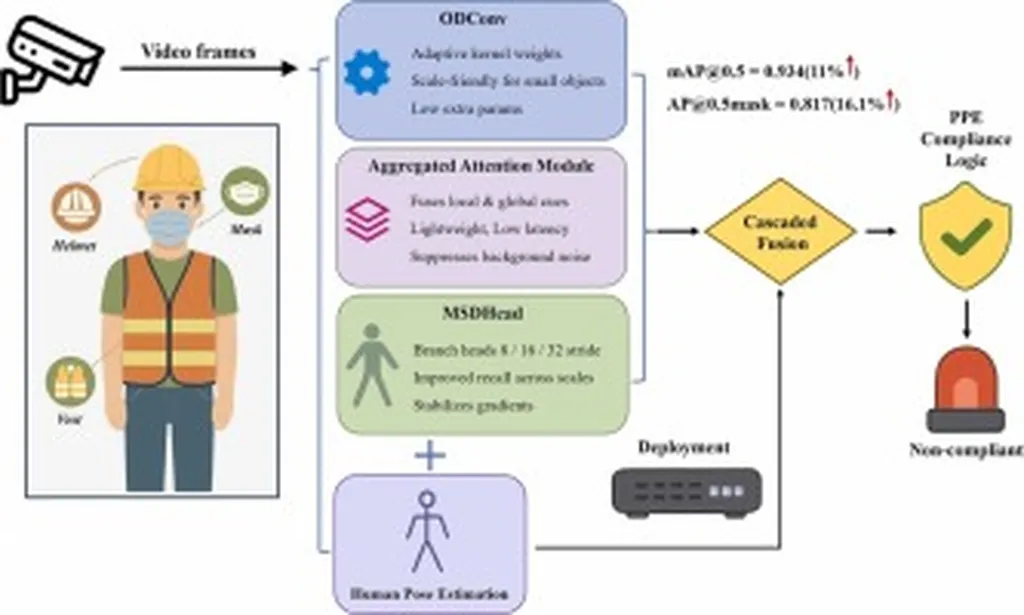

Traditional manual supervision in cement aggregate production workshops often falls short due to the dynamic and hazardous nature of the operations. Artificial intelligence-driven video surveillance has been touted as a potential solution, but existing object detection models frequently falter when identifying small and multiscale targets, leading to high error rates and limited practical effectiveness. Enter ODEM–YOLO, a lightweight yet highly accurate object detection model based on an enhanced YOLOv8 architecture. This model is specifically engineered to overcome these limitations, providing a reliable and efficient means of ensuring worker safety.

“Our goal was to create a model that could accurately detect safety wear in real-time, even in the most challenging conditions,” said Xin Li, lead author of the study and a researcher at the School of Emergency Management and Safety Engineering, China University of Mining and Technology-Beijing. “The ODEM–YOLO model incorporates several key innovations that set it apart from existing solutions.”

At the heart of ODEM–YOLO lies the omni-dimensional dynamic convolution (ODConv) module, which uses a multidimensional attention mechanism to learn kernel weights dynamically along the spatial, input-channel, output-channel and kernel-number dimensions. This adaptive focus on salient features improves the model’s ability to pick out small targets in complex scenes. In addition, the neck network is optimized with an improved efficient multiscale attention (iEMA) mechanism, which combines 1 × 1 pointwise convolutions with 3 × 3 depthwise separable convolutions for efficient spatial feature learning. This allows the model to capture and fuse multiscale contextual information at a substantially lower computational cost.
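To make the ODConv idea more concrete, here is a minimal pure-Python sketch of how an input-dependent kernel can be assembled. It follows the general ODConv recipe of weighting several candidate kernels by four attentions (spatial, input-channel, output-channel and whole-kernel), with sigmoid gates for the first three and a softmax over candidates for the last. The function names and the nested-list layout are hypothetical; the attention logits are passed in directly rather than produced by the small pooling-and-fully-connected head that would compute them from the input; and the three gating attentions are shared across candidate kernels. None of this is taken from the ODEM–YOLO code.

```python
import math


def sigmoid(v):
    """Logistic gate in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


def softmax(values):
    """Numerically stable softmax over a list of logits."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def odconv_aggregate(kernels, spatial_logits, in_logits, out_logits, kernel_logits):
    """Fuse n candidate kernels into one dynamic kernel (illustrative sketch).

    kernels[i][o][c][p] is the weight of candidate kernel i for output
    channel o, input channel c and spatial position p (k*k positions
    flattened). In a real layer the logits would be computed from the input.
    """
    alpha_s = [sigmoid(v) for v in spatial_logits]  # one gate per spatial position
    alpha_c = [sigmoid(v) for v in in_logits]       # one gate per input channel
    alpha_f = [sigmoid(v) for v in out_logits]      # one gate per output channel
    alpha_w = softmax(kernel_logits)                # one weight per candidate kernel

    n = len(kernels)
    c_out, c_in, k2 = len(kernels[0]), len(kernels[0][0]), len(kernels[0][0][0])
    fused = [[[0.0] * k2 for _ in range(c_in)] for _ in range(c_out)]
    for i in range(n):
        for o in range(c_out):
            for c in range(c_in):
                for p in range(k2):
                    # Each weight is scaled by all four attentions, then summed over kernels.
                    fused[o][c][p] += (alpha_w[i] * alpha_f[o] * alpha_c[c]
                                       * alpha_s[p] * kernels[i][o][c][p])
    return fused


# Two 1x1 single-channel candidates with weights 1.0 and 3.0, all logits zero.
fused = odconv_aggregate([[[[1.0]]], [[[3.0]]]], [0.0], [0.0], [0.0], [0.0, 0.0])
print(fused[0][0][0])  # 0.25
```

With all logits at zero every sigmoid gate is 0.5 and the softmax splits evenly, so the fused weight is 0.5 × 0.5 × 0.5 × 0.5 × (1.0 + 3.0) = 0.25. Non-zero logits would let the layer emphasize particular positions, channels or candidate kernels for each input.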

One of the most innovative aspects of ODEM–YOLO is the C2f multi-scale edge information enhancement (C2f_MSEIE) module. This module replaces the original C2f blocks and is designed to strengthen target edge information so that object boundaries are more clearly defined. It comprises a local convolution branch that preserves fine-grained details and a multiscale edge modeling branch that uses AdaptiveAvgPool2d with multiple bin sizes, together with a novel Edge Enhancer submodule, to extract and reinforce high-frequency edge features.
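The multiscale edge branch can be pictured with a similarly small sketch on a single-channel 2-D grid. The adaptive average pooling below uses PyTorch's bin-edge rule (start = floor(i·H/b), end = ceil((i+1)·H/b)), so bins may overlap when H is not divisible by b. The article does not say what Edge Enhancer computes internally; here we assume it subtracts a local 3 × 3 mean to isolate high-frequency detail and adds that detail back, with a fixed gain standing in for any learned layer, which amounts to classic unsharp masking. Nearest-neighbour upsampling, the default bin sizes (1, 2, 4) and all function names are likewise illustrative assumptions.

```python
def adaptive_avg_pool2d(x, bins):
    """Average-pool a 2-D grid into bins x bins cells using PyTorch-style bin edges."""
    h, w = len(x), len(x[0])
    pooled = []
    for i in range(bins):
        r0, r1 = (i * h) // bins, ((i + 1) * h + bins - 1) // bins  # floor / ceil
        row = []
        for j in range(bins):
            c0, c1 = (j * w) // bins, ((j + 1) * w + bins - 1) // bins
            cells = [x[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        pooled.append(row)
    return pooled


def upsample_nearest(x, h, w):
    """Resize a grid back to h x w by nearest-neighbour lookup."""
    bh, bw = len(x), len(x[0])
    return [[x[(r * bh) // h][(c * bw) // w] for c in range(w)] for r in range(h)]


def edge_enhance(x, gain=1.0):
    """Hypothetical Edge Enhancer stand-in: x plus gain * (x - local 3x3 mean).

    Border pixels average only the neighbours that exist.
    """
    h, w = len(x), len(x[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            nbrs = [x[rr][cc]
                    for rr in range(max(0, r - 1), min(h, r + 2))
                    for cc in range(max(0, c - 1), min(w, c + 2))]
            high_freq = x[r][c] - sum(nbrs) / len(nbrs)
            row.append(x[r][c] + gain * high_freq)
        out.append(row)
    return out


def multiscale_edge_branch(x, bin_sizes=(1, 2, 4)):
    """Pool at several bin sizes, restore resolution, then sharpen edges.

    Returns one map per bin size; a real module would concatenate these
    along the channel axis with the output of the local convolution branch.
    """
    h, w = len(x), len(x[0])
    return [edge_enhance(upsample_nearest(adaptive_avg_pool2d(x, b), h, w))
            for b in bin_sizes]


# A 4x4 vertical step edge: left half 0, right half 1.
step = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
maps = multiscale_edge_branch(step)
print(adaptive_avg_pool2d(step, 2))  # [[0.0, 1.0], [0.0, 1.0]]
```

On the step image, the one-bin map collapses to a flat 0.5 (pure global context), while the finer bins keep the edge, and the enhancement pushes pixels on either side of it further apart (about -0.33 and 1.33 next to the boundary), which is the kind of boundary sharpening the module is described as providing.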

The efficacy of ODEM–YOLO was validated on a custom dataset of 9877 images collected in real cement aggregate workshops, featuring diverse small and multiscale targets under realistic and challenging conditions. The experimental results demonstrate ODEM–YOLO’s superior performance: it achieves an overall mean average precision (mAP) of 0.896 and an average precision of 0.746 on the challenging small “mask” class. Despite these accuracy gains, the model remains compact at only 6.9 MB and processes a single image in 8.2 ms at a computational cost of 9.5 GFLOPs, outperforming other mainstream lightweight models such as YOLOv5n and YOLOv10n.
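Because the headline figures are average-precision scores, it may help to see how such a score is computed for one class. The sketch below matches detections to ground-truth boxes greedily in descending confidence order at a chosen IoU threshold, then integrates the precision-recall curve with all-point interpolation; averaging the per-class values gives mAP. This is a generic textbook procedure with hypothetical function names, not the study's evaluation code, and toolkits differ in details such as the interpolation scheme, so its numbers need not match the paper's exactly.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thr=0.5):
    """AP for a single class.

    detections:   list of (image_id, score, box)
    ground_truth: dict mapping image_id -> list of boxes
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp_flags = []
    # Highest-confidence detections claim ground-truth boxes first.
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best, best_iou = -1, iou_thr
        for k, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap >= best_iou and not matched[img][k]:
                best, best_iou = k, overlap
        if best >= 0:
            matched[img][best] = True
            tp_flags.append(1)  # true positive
        else:
            tp_flags.append(0)  # false positive (no unmatched box above threshold)

    precisions, recalls, tp = [], [], 0
    for i, flag in enumerate(tp_flags, start=1):
        tp += flag
        precisions.append(tp / i)
        recalls.append(tp / n_gt)
    # All-point interpolation: make precision non-increasing from right to left.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p  # area under the step-shaped PR curve
        prev_recall = r
    return ap


gt = {"img1": [(0, 0, 10, 10)], "img2": [(0, 0, 10, 10)]}
dets = [("img1", 0.9, (0, 0, 10, 10)),   # exact hit
        ("img2", 0.8, (20, 20, 30, 30)),  # false alarm
        ("img2", 0.7, (1, 1, 10, 10))]    # offset hit, IoU = 0.81
print(round(average_precision(dets, gt, 0.5), 3))  # 0.833
```

In this toy case the offset box counts as a hit at IoU 0.5, giving an AP of 5/6; raising the threshold to 0.9 turns it into a miss and the AP drops to 0.5. Small objects such as masks are especially sensitive to this effect, because a few pixels of localization error already cost a large fraction of their IoU.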

The implications of this research are far-reaching, particularly for the energy sector, where workplace safety is paramount. The ability to accurately and efficiently monitor PPE compliance can significantly reduce the risk of accidents and enhance overall safety protocols. “This technology has the potential to transform safety monitoring in high-risk industries,” said Li. “By providing real-time, accurate detection of safety wear, we can help prevent accidents and save lives.”

When deployed on an NVIDIA Jetson Nano B01 embedded device, ODEM–YOLO performed real-time detection at 25 frames per second, meeting the demanding requirements of on-site industrial safety monitoring. This breakthrough not only enhances occupational safety but also sets a new standard for AI-driven safety solutions.
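A quick calculation shows what the 25 frames-per-second figure implies for the embedded pipeline: sustaining that rate leaves an average time budget of

$$
t_{\text{frame}} \le \frac{1000\ \text{ms}}{25\ \text{frames}} = 40\ \text{ms per frame},
$$

within which the device has to keep up with capture, preprocessing, inference and post-processing on a continuous stream.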

As the energy sector continues to evolve, the need for advanced safety technologies becomes increasingly critical. ODEM–YOLO represents a significant step forward in this arena, offering a reliable and efficient means of ensuring worker safety in some of the most challenging environments. With its innovative architecture and superior performance, ODEM–YOLO is poised to shape the future of safety wear detection, paving the way for a safer and more secure industrial landscape.

Published in the Journal of Engineering Science (工程科学学报), this research underscores the importance of continuous innovation in the field of safety engineering. As industries strive to meet the demands of a rapidly changing world, technologies like ODEM–YOLO